Measuring the success of UX work for an e-commerce site with millions of hits a month can be relatively straightforward. If a change is implemented in the morning, and sales go up in the afternoon, it’s worked. (I simplify a little.) And with that many hits, A/B testing is a potentially powerful resource.

Producing quantitative evidence of UX success is not always so simple. For example, a recent UX review I led for Kings Court Trust concerned an online portal for our business partners. Sign-up rates, purchasing rates, amount spent, bounce rates, hit count – none of these measures applied. There were too few users for reliable A/B testing. So, to track success – or otherwise – I needed a different approach.

I relied on benchmarking. That means establishing a base level (a ‘benchmark’) and evaluating later results by comparing them to that benchmark. It’s how we judge whether sign-up rates, amount spent and so on have gone up or down. For this project, in the absence of those measures, I used user satisfaction ratings.

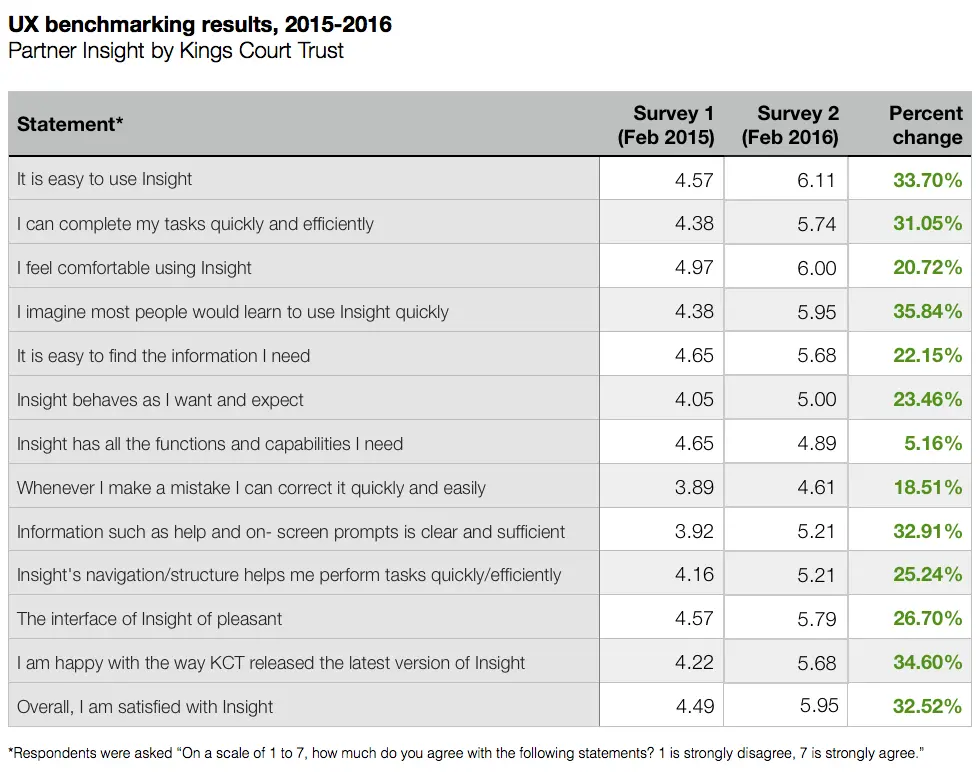

I had to plan ahead. Before the project began (Feb 2015), I sent a survey to users, our business partners. A number of questions helped to formulate my UX review (to do with tasks, usage, etc), while others focused purely on benchmarking. I provided a number of statements (for example, ‘I can complete my tasks efficiently’, ‘It is easy to use Insight’) and asked users how much they agreed, from 1 to 7.

(Asking these questions couldn’t give me the evidence I needed to formulate solutions – for that, I undertook a number of usability tests with partners.)

Nine months later, we launched a new version of the portal (Insight), which the development team had significantly overhauled based on my recommendations. Three months after that, when users had got used to the new system, I sent a follow-up survey with the same benchmarking questions.

Benchmarking results

The results were as follows:

UX benchmarking results table, showing weighted averages for each survey out of 7
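
For anyone wanting to produce the same kind of figure, here is a minimal sketch of how a weighted average out of 7 can be calculated for one statement. The response counts are made up for illustration and are not the Insight survey data, and the function name `weighted_average` is my own.

```python
def weighted_average(counts):
    """Mean agreement score from a mapping of rating (1-7) -> number of respondents."""
    total_responses = sum(counts.values())
    if total_responses == 0:
        raise ValueError("no responses recorded")
    # Each rating is weighted by how many respondents chose it.
    return sum(rating * n for rating, n in counts.items()) / total_responses


# Hypothetical response counts for a single statement (illustrative only).
example_counts = {1: 0, 2: 1, 3: 2, 4: 5, 5: 6, 6: 4, 7: 2}
score = weighted_average(example_counts)
print(f"{score:.2f} out of 7")
```

Running the same calculation on the benchmark survey and on the follow-up survey gives one pair of scores per statement to compare.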

The average increase was 25.7%. Agreement with the key statement “Overall, I am satisfied with Insight” increased by 32.5%. In a number of cases, the level of agreement increased by over 30%.
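
As a rough sketch of the arithmetic behind figures like these, a percentage increase compares each follow-up score to its benchmark score. The statement labels and scores below are hypothetical, not the real results, and I am assuming that the overall figure is the mean of the per-statement increases.

```python
def percent_increase(baseline, follow_up):
    """Percentage change from the benchmark score to the follow-up score."""
    if baseline <= 0:
        raise ValueError("baseline score must be positive")
    return (follow_up - baseline) / baseline * 100


# Hypothetical (benchmark, follow-up) weighted averages out of 7.
scores = {
    "statement A": (4.0, 5.0),
    "statement B": (5.0, 6.0),
}

increases = {name: percent_increase(before, after) for name, (before, after) in scores.items()}

# Assumption: the overall figure is the mean of the per-statement increases.
average_increase = sum(increases.values()) / len(increases)

for name, pct in increases.items():
    print(f"{name}: {pct:.1f}%")
print(f"average increase: {average_increase:.1f}%")
```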

While there is still room for improvement, these are substantial increases. They very much indicate a successful project.

This was a hugely important exercise, because:

- It proved the effectiveness and importance of UX (particularly significant when you consider that I am the first UX specialist employed by Kings Court Trust and this was my first project)

- For Kings Court Trust, it provided an indication of return-on-investment, with success justifying the cost and development resource

- It provided quantitative evidence, as opposed to qualitative word-of-mouth feedback, that the users – our business partners – appreciated the changes, an important aspect of our commercial relationship with them

- It will help to guide and justify future UX work

- It identified areas for further improvement

- It motivated the team

Naturally, I made a big deal of these results within the company. And it was not just about me; our developers worked overtime to implement the changes, and a number of others were involved from marketing to project management. It was a fantastic team success, and these results vindicate our effort.

In this case, it’s worth noting that we did not decide in advance what would constitute success. Would it have been a successful project if mean satisfaction ratings went up by 5%, 10%, 20%, 30%…? Setting a target can be difficult, but it’s a useful step that we’ve begun to implement on more recent projects. For this project, though, I don’t think we lost out by not doing so.
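
Deciding on a target beforehand can be as simple as writing the threshold down and checking the follow-up score against it. This is a minimal sketch only: the 20% target, the scores and the name `meets_target` are all hypothetical.

```python
def meets_target(baseline, follow_up, target_pct):
    """True if the score rose by at least target_pct percent over the benchmark."""
    if baseline <= 0:
        raise ValueError("baseline score must be positive")
    change_pct = (follow_up - baseline) / baseline * 100
    return change_pct >= target_pct


# Hypothetical target, agreed before the project starts.
TARGET_PCT = 20.0

# Hypothetical benchmark and follow-up scores out of 7.
print(meets_target(4.0, 5.0, TARGET_PCT))
```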

Design and UX have subjective elements, and this can cloud the need for objective measurements. Often, measurement simply doesn’t happen. But in my view, objectively measuring the success of UX work, in whatever way works for a particular project, is a critical part of the process. It justifies our work, proves our worth to employers and clients, and provides valuable metrics which can guide future projects. After all, we’re in the results business.

That means planning ahead and considering how the success of the project will be measured even before it begins.

Useful UX benchmarking resources:

https://www.usertesting.com/blog/2015/01/05/benchmarking/

http://www.userzoom.co.uk/competitive-ux-benchmarking/

http://www.userfocus.co.uk/articles/guide-to-benchmarking-UX.html

https://disciullodesign.wordpress.com/2013/12/08/6-steps-for-measuring-success-on-ux-projects/